Is Gemma Capable of Building Multi-agent Applications in AutoGen?

A Quick Tutorial on Building "Gemma + AutoGen" Apps with Performance Evaluations

Big news every day! While I was playing with Google’s most powerful large language model, Gemini 1.5 Pro, Google suddenly unveiled its latest contribution to the open-source LLM community: Gemma, a family of lightweight, text-generation, decoder-only models with state-of-the-art performance for their size, trained on up to 6 trillion tokens. Available at 2 billion and 7 billion parameter scales, each in base and instruct versions, Gemma gives LLM app developers a brand new option on the shelf for upgrading their apps with fair generation quality at lower cost. Built with techniques inspired by Gemini, these models achieve impressive benchmark scores, and their open-source nature allows for further fine-tuning and customization.

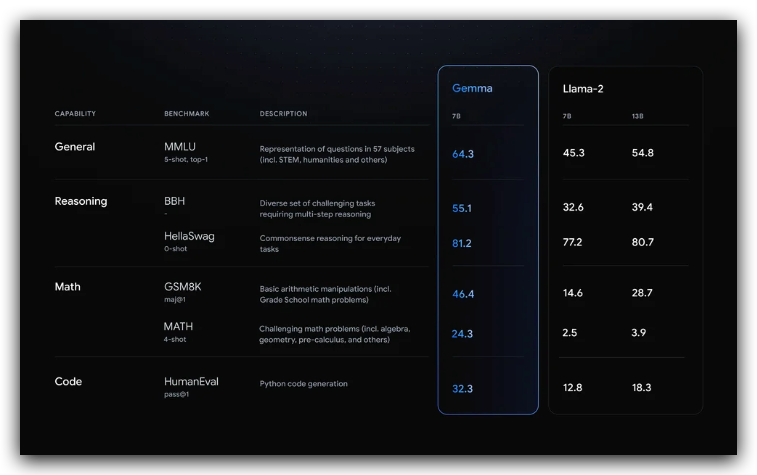

As a SOTA open-source language model, Gemma cannot escape comparison with the Llama-2 models and, of course, is expected to “beat” them on many benchmarks of generative capability. According to Gemma’s released report, it comfortably beats Llama-2-7B and even the 13B model in the General, Reasoning, Math, and Code categories. When I checked the same data for Mistral-7B, which commonly sits at the top of the 7B models, I was surprised to find that Gemma-7B outperformed it in almost all of these categories.

You will find more details in Google’s blog post.

Benchmark data is just data. As an LLM app developer, I understand that a model’s real commercial value lies in its actual quality on complex tasks in practice. Therefore, I am going to implement a multi-agent application with the AutoGen framework, showing how to create agents with Gemma models. Hopefully, the experiment will show whether this model has the reasoning and orchestration capability to complete tasks more complex than simple one-answer generation.

AutoGen + Gemma models

This demonstration is based on a remote inference API, for those who don’t have GPU resources. Another tutorial on local deployment, for those who have the hardware and data privacy concerns, will be published very soon.

In the application, I am going to create a one-on-one conversation scenario to test the model’s overall generation quality, especially on coding, and a group chat scenario with multiple agents to see how well (or badly) the orchestration works.

Gemma API from OpenRouter

The remote implementation calls the Gemma model API directly from a third-party inference service. Such services help developers who have no local GPU build apps on open-source language models in the same way they would with GPT or Gemini models, but at a much lower cost.

In this demo, I will use a platform called OpenRouter, which provided an inference endpoint for the Gemma-7B-it (instruction-tuned) model right after Google’s release and, most importantly, for free!

If you don’t want to put up with the rate limit (10 requests per minute) of the free version, you can also try the paid version at only $0.13 per million tokens for both input and output. It’s a very competitive price, even for individual experimentation.
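To put those two numbers in perspective, here is a small back-of-the-envelope sketch. The helper names are hypothetical, and the rate limit and price are simply the figures quoted above, which may well change.

```python
# Figures quoted in this post; treat them as assumptions that may change.
FREE_TIER_REQUESTS_PER_MINUTE = 10
PAID_PRICE_USD_PER_MILLION_TOKENS = 0.13


def min_seconds_between_requests(requests_per_minute: int) -> float:
    """Spacing between calls needed to stay under a per-minute request cap."""
    return 60.0 / requests_per_minute


def estimate_cost_usd(
    total_tokens: int,
    price_per_million: float = PAID_PRICE_USD_PER_MILLION_TOKENS,
) -> float:
    """Cost of a run, counting input and output tokens at the same price."""
    return total_tokens / 1_000_000 * price_per_million


print(min_seconds_between_requests(FREE_TIER_REQUESTS_PER_MINUTE))
print(estimate_cost_usd(50_000))
```

In other words, the free tier allows roughly one request every six seconds, and a chat that consumes 50,000 tokens on the paid tier would cost well under one cent at the quoted price.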

Let’s drop a breakpoint here and step through the code.

LLM Configuration

The AutoGen framework sends requests and receives responses through the OpenAI API by default. Fortunately, OpenRouter offers an OpenAI-compatible API, which means developers don’t have to implement additional web requests for its inference service.

Therefore, we should first install the AutoGen package, which bundles the OpenAI client.
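To make "OpenAI-compatible" concrete, here is a minimal sketch of the kind of chat-completions request that ends up being sent to OpenRouter. It only builds the request with the standard library and does not send it. The helper name build_chat_request is hypothetical, the API key is a placeholder, and AutoGen handles all of this internally, so this is for illustration only.

```python
import json
import urllib.request

OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"


def build_chat_request(
    api_key: str, prompt: str, model: str = "google/gemma-7b-it"
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 1000,
    }
    return urllib.request.Request(
        url=f"{OPENROUTER_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder key: replace with your own before actually sending anything.
request = build_chat_request("sk-or-v1-Your OpenRouter KEY", "Say hello.")
print(request.full_url)
```

The payload has the same shape as a request to OpenAI; only the base URL, the key, and the model name differ.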

    !pip install --quiet --upgrade pyautogen

Next, we should create an LLM config as a necessary step for the AutoGen application. Define an environment variable for the config list first.

    import os

    os.environ['OAI_CONFIG_LIST'] = """[{"model": "google/gemma-7b-it",
    "api_key": "sk-or-v1-Your OpenRouter KEY",
    "base_url": "https://openrouter.ai/api/v1",
    "max_tokens": 1000}]"""

Make sure the model name and path match what is shown on the model page, and use the API key that you created in your OpenRouter account. Don’t forget to set base_url to OpenRouter’s endpoint; otherwise, your requests will be sent to OpenAI.

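Since a typo in this JSON string only surfaces later as a confusing request error, it can be worth sanity-checking it up front. The following is a minimal sketch with a hypothetical helper, check_config_list, which is not part of AutoGen; it only verifies that the string parses and that each entry carries the three keys used above and points at OpenRouter.

```python
import json

REQUIRED_KEYS = {"model", "api_key", "base_url"}


def check_config_list(raw: str) -> list:
    """Parse the config list JSON and check the fields used in this post."""
    entries = json.loads(raw)
    if not isinstance(entries, list):
        raise ValueError("OAI_CONFIG_LIST must be a JSON list")
    for entry in entries:
        missing = REQUIRED_KEYS - entry.keys()
        if missing:
            raise ValueError(f"config entry is missing keys: {sorted(missing)}")
        if "openrouter.ai" not in entry["base_url"]:
            raise ValueError(f"unexpected base_url: {entry['base_url']}")
    return entries


# Same shape as the environment variable above, with a placeholder key.
sample = """[{"model": "google/gemma-7b-it",
"api_key": "sk-or-v1-Your OpenRouter KEY",
"base_url": "https://openrouter.ai/api/v1",
"max_tokens": 1000}]"""

configs = check_config_list(sample)
print(configs[0]["model"])
```

In a notebook you could pass os.environ['OAI_CONFIG_LIST'] to the same helper before building the agents.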
Then define the llm_config for the agents.

    import autogen

    llm_config = {
        "timeout": 600,
        "cache_seed": 28,  # change the seed for different trials
        "config_list": autogen.config_list_from_json(
            "OAI_CONFIG_LIST",
            filter_dict={"model": ["google/gemma-7b-it"]},
        ),
        "temperature": 0.7,
    }

Case 1: 1-on-1 conversation

OK, let’s construct the two agents.

    from autogen.agentchat.contrib.math_user_proxy_agent import MathUserProxyAgent

    # create an AssistantAgent instance named "coder"
    assistant = autogen.AssistantAgent(
        name="coder",
        system_message="You are good at coding",
        llm_config=llm_config,
    )

    # create a MathUserProxyAgent that runs the generated code locally
    mathproxyagent = MathUserProxyAgent(
        name="mathproxyagent",
        human_input_mode="NEVER",
        # end the chat when a message ends with "TERMINATE"
        is_termination_msg=lambda x: x.get("content", "") and x.get("content", "").rstrip().endswith("TERMINATE"),
        code_execution_config={
            "work_dir": "work_dir",
            "use_docker": False,
        },
        max_consecutive_auto_reply=3,
    )

The first agent, “coder”, is an assistant agent driven by the Gemma model to generate code-related responses. The second is a special user proxy agent that can generate well-structured prompts for math problems and also execute code in its environment.
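The termination check passed to the proxy agent is easy to misread in lambda form, so here is a minimal standalone sketch of the same logic as a named function, with a few sample messages. The sample messages are made up for illustration, and unlike the lambda this version always returns a strict bool.

```python
def is_termination_msg(message: dict) -> bool:
    """Return True when the message content ends with the word TERMINATE."""
    content = message.get("content") or ""  # tolerate a missing or None content
    return content.rstrip().endswith("TERMINATE")


# Hypothetical messages, shaped like the dicts AutoGen passes to the check.
samples = [
    {"content": "The answer is x > -3. TERMINATE\n"},  # trailing newline is stripped
    {"content": "TERMINATE is not at the end here"},
    {"content": None},
    {},
]

results = [is_termination_msg(m) for m in samples]
print(results)  # [True, False, False, False]
```

Only the first message stops the chat. Note that a model that never writes TERMINATE will keep going until max_consecutive_auto_reply is reached.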

Simply prompt them with an inequality calculation.

    task1 = """
    Find all $x$ that satisfy the inequality $(2x+10)(x+3)<(3x+9)(x+8)$. Use code.
    """

    mathproxyagent.initiate_chat(assistant, problem=task1)

Case 1 Result

From the output, the conversation workflow basically works with Gemma. The “coder” generated a snippet of Python code that followed the instructions from the math user proxy agent.
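When judging what the agents produce, it helps to know the correct answer in advance. Expanding both sides of the inequality gives 2x² + 16x + 30 < 3x² + 33x + 72, which simplifies to (x + 3)(x + 14) > 0, so the solution is x < -14 or x > -3. This derivation is a reference worked out by hand, not Gemma's output. A minimal sketch that cross-checks it numerically on a grid of sample points:

```python
def inequality_holds(x: float) -> bool:
    """Evaluate the original inequality from task1 directly."""
    return (2 * x + 10) * (x + 3) < (3 * x + 9) * (x + 8)


def expected_solution(x: float) -> bool:
    """Hand-derived solution set: x < -14 or x > -3."""
    return x < -14 or x > -3


# Sample points from -25 to 25 in steps of 0.25, including both boundary values.
samples = [n / 4 for n in range(-100, 101)]
mismatches = [x for x in samples if inequality_holds(x) != expected_solution(x)]
print(mismatches)  # [] means the hand-derived answer agrees on every sample
```

Any code the “coder” agent generates can be compared against this reference interval.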
