How to Create a Realtime Multimodal App Running in Google AI Studio

A Quick Guide to Building a Gemini App with the JavaScript SDK

If you develop LLM applications, especially ones built around real-time voice and video interaction, the Gemini Live API is a must-try. Many of us have been exploring its multimodal capabilities, fast responses, session continuation, and tool-use features to build compelling apps, from proofs of concept to production launches. During early development, setting up a server environment to deploy your app is a necessary but tedious chore, so Google AI Studio provides an excellent sandbox called “Starter Apps,” which lets developers prototype in real time without backend overhead and easily share their work and source code.

To create an app, click the “Create an app” button and then choose a template from the template library.

Unfortunately, although there is a “Live” template, it is a text-based, real-time chat app rather than a truly live multimodal chat that combines continuous voice input and output with dynamic image context. Full voice and image functionality is also missing from the showcase demos.

To fill this gap, I wrote this tutorial to walk through creating (or cloning) a "Multimodal Live Chat" starter app directly within Google AI Studio. My goal is to demonstrate how you can build an application where users can:

Engage in a real-time voice conversation with the Gemini Live API.

Upload an image that is then periodically sent to the model, providing ongoing visual context.

Receive spoken audio responses from Gemini that can consider both the voice and image inputs.

This approach addresses the desire for a more contextual and interactive multimodal experience, all within the convenient, browser-based environment of AI Studio.

Since apps in Google AI Studio are written in JavaScript/TypeScript, this tutorial can also serve as a guide to building a JS/TS application with a mainstream framework like React that calls the Gemini Live SDK directly.

Here is the video for this demo:

System Architecture and Data Flow

Our application operates entirely client-side, leveraging the browser's capabilities and the Gemini JavaScript SDK.

Diagram Description:

User Input: The user interacts via voice (microphone) and image uploads.

Client-Side Processing:

Voice: Raw microphone audio is captured with the Web Audio API. An AudioWorkletProcessor handles the crucial tasks of resampling this audio to 16 kHz (the input rate Gemini expects) and converting it to 16-bit linear PCM. The processed audio is then Base64-encoded on the main thread.
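The voice pipeline above boils down to three small transformations. The sketch below is a minimal illustration under a few assumptions: the helper names (`resampleTo16k`, `floatTo16BitPCM`, `pcm16ToBase64`) are hypothetical, the resampler is plain linear interpolation with no anti-aliasing filter (a simplification that is usually tolerable for speech), and the input rate would come from the AudioContext (inside an AudioWorkletProcessor, the global `sampleRate`).

```typescript
// Target rate for microphone input sent to the Live API (16 kHz, mono).
const TARGET_RATE = 16000;

// Hypothetical helper: naive linear-interpolation resampler from the
// AudioContext rate (often 44.1 or 48 kHz) down to 16 kHz.
// No low-pass filter is applied before decimation (simplification).
function resampleTo16k(input: Float32Array, inputRate: number): Float32Array {
  if (inputRate === TARGET_RATE) return input.slice();
  const ratio = inputRate / TARGET_RATE;
  const outLength = Math.floor(input.length / ratio);
  const out = new Float32Array(outLength);
  for (let i = 0; i < outLength; i++) {
    const pos = i * ratio;
    const idx = Math.floor(pos);
    const frac = pos - idx;
    const next = Math.min(idx + 1, input.length - 1);
    // Blend the two neighbouring input samples.
    out[i] = input[idx] * (1 - frac) + input[next] * frac;
  }
  return out;
}

// Hypothetical helper: clamp float samples to [-1, 1] and scale them
// to signed 16-bit integers (linear PCM).
function floatTo16BitPCM(samples: Float32Array): Int16Array {
  const out = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]));
    out[i] = s < 0 ? s * 0x8000 : s * 0x7fff;
  }
  return out;
}

// Hypothetical helper (main thread): write the samples as little-endian
// bytes and Base64-encode them for sendRealtimeInput.
function pcm16ToBase64(pcm: Int16Array): string {
  const view = new DataView(new ArrayBuffer(pcm.length * 2));
  pcm.forEach((v, i) => view.setInt16(i * 2, v, true)); // true = little-endian
  let binary = "";
  for (let i = 0; i < view.byteLength; i++) {
    binary += String.fromCharCode(view.getUint8(i));
  }
  return btoa(binary);
}
```

In a real app, the first two steps would run inside the worklet's `process()` callback, and the worklet would post the `Int16Array` to the main thread, where `pcm16ToBase64` prepares it for sending.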

Image: Uploaded images are read by the FileReader API, and their Base64-encoded data and MIME type are stored.
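A minimal sketch of the image step, assuming the upload is read with `FileReader.readAsDataURL()`, which yields a string like `data:image/png;base64,...`. The Live API takes the MIME type and the bare Base64 payload as separate fields, so the data URL is split in two. `ImageContext`, `parseDataUrl`, and `readImageFile` are hypothetical names used only for illustration.

```typescript
// Hypothetical shape for the stored image context.
interface ImageContext {
  mimeType: string; // e.g. "image/png"
  data: string; // Base64 payload without the "data:...;base64," prefix
}

// Split a Base64 data URL into its MIME type and payload.
function parseDataUrl(dataUrl: string): ImageContext {
  const match = /^data:([^;,]+);base64,(.*)$/.exec(dataUrl);
  if (!match) {
    throw new Error("Expected a Base64 data URL");
  }
  return { mimeType: match[1], data: match[2] };
}

// Browser-only wrapper: read an uploaded File and resolve with its
// MIME type and Base64 data.
function readImageFile(file: File): Promise<ImageContext> {
  return new Promise((resolve, reject) => {
    const reader = new FileReader();
    reader.onload = () => {
      try {
        resolve(parseDataUrl(reader.result as string));
      } catch (err) {
        reject(err);
      }
    };
    reader.onerror = () => reject(reader.error);
    reader.readAsDataURL(file);
  });
}
```

An `<input type="file">` change handler could call `readImageFile(input.files[0])` and keep the result in component state until the next periodic send.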

Gemini SDK Interaction: Both the Base64 audio chunks and the Base64 image data (sent periodically) are transmitted to the Gemini API with the session's sendRealtimeInput method, over a persistent WebSocket connection opened by ai.live.connect().

Gemini API & Response: The Gemini model processes these multimodal inputs. Audio responses are sent back as Base64-encoded 16-bit PCM at 24 kHz.
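One way to combine a continuous audio stream with a periodically refreshed image is sketched below. It is a hedged illustration, not the SDK's own API: `RealtimeSession` is a minimal structural type standing in for the session object returned by `ai.live.connect()`, and the `{ audio }` / `{ video }` field names follow recent `@google/genai` releases as I understand them (older releases used a single `media` field), so check them against the SDK version you install. `MultimodalSender` and the 3-second default interval are hypothetical choices.

```typescript
// Base64 payload plus MIME type, as sendRealtimeInput expects.
interface Blob64 {
  data: string;
  mimeType: string; // e.g. "audio/pcm;rate=16000" or "image/jpeg"
}

// Assumed subset of the Live session used here (verify field names).
interface RealtimeSession {
  sendRealtimeInput(input: { audio?: Blob64; video?: Blob64 }): void;
}

// Hypothetical helper: forwards every audio chunk immediately and
// re-sends the current image at most once per intervalMs.
class MultimodalSender {
  private session: RealtimeSession;
  private intervalMs: number;
  private image: Blob64 | null = null;
  private lastImageAt = Number.NEGATIVE_INFINITY;

  constructor(session: RealtimeSession, intervalMs = 3000) {
    this.session = session;
    this.intervalMs = intervalMs;
  }

  // Store a new image (or clear it) and make sure it goes out promptly.
  setImage(image: Blob64 | null): void {
    this.image = image;
    this.lastImageAt = Number.NEGATIVE_INFINITY;
  }

  // Send one Base64 PCM chunk, then piggyback the image if it is due.
  sendAudioChunk(base64Pcm: string, nowMs: number = Date.now()): void {
    this.session.sendRealtimeInput({
      audio: { data: base64Pcm, mimeType: "audio/pcm;rate=16000" },
    });
    this.maybeSendImage(nowMs);
  }

  // Returns true if the image was sent on this call.
  maybeSendImage(nowMs: number = Date.now()): boolean {
    if (!this.image || nowMs - this.lastImageAt < this.intervalMs) return false;
    this.session.sendRealtimeInput({ video: this.image });
    this.lastImageAt = nowMs;
    return true;
  }
}
```

Driving the image refresh from the audio callback keeps the logic deterministic and easy to test; a `setInterval` calling `maybeSendImage()` would work just as well if images should keep flowing while the user is silent.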

Output: The application decodes the incoming audio and plays it back to the user via the Web Audio API.
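The playback side reverses the encoding. A minimal sketch, assuming each response chunk is Base64 16-bit little-endian PCM at 24 kHz; `base64ToFloat32` and `PcmPlayer` are hypothetical names. Chunks are scheduled back-to-back on the AudioContext clock so consecutive pieces play without gaps.

```typescript
// Sample rate of Live API audio output.
const OUTPUT_RATE = 24000;

// Decode Base64 16-bit little-endian PCM into floats in [-1, 1).
function base64ToFloat32(base64: string) {
  const binary = atob(base64);
  const view = new DataView(new ArrayBuffer(binary.length));
  for (let i = 0; i < binary.length; i++) view.setUint8(i, binary.charCodeAt(i));
  const out = new Float32Array(Math.floor(binary.length / 2));
  for (let i = 0; i < out.length; i++) {
    out[i] = view.getInt16(i * 2, true) / 32768; // true = little-endian
  }
  return out;
}

// Browser-only: queue decoded chunks one after another for gapless playback.
class PcmPlayer {
  private ctx: AudioContext;
  private nextStartTime = 0;

  constructor(ctx: AudioContext) {
    this.ctx = ctx;
  }

  enqueue(base64: string): void {
    const samples = base64ToFloat32(base64);
    if (samples.length === 0) return; // createBuffer rejects zero length
    const buffer = this.ctx.createBuffer(1, samples.length, OUTPUT_RATE);
    buffer.getChannelData(0).set(samples);
    const source = this.ctx.createBufferSource();
    source.buffer = buffer;
    source.connect(this.ctx.destination);
    // Start no earlier than "now", otherwise right after the previous chunk.
    this.nextStartTime = Math.max(this.nextStartTime, this.ctx.currentTime);
    source.start(this.nextStartTime);
    this.nextStartTime += buffer.duration;
  }
}
```

Creating the AudioBuffer at 24 kHz lets the browser resample to the context's own rate, so the playback context does not need to run at 24 kHz itself.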

This client-centric architecture is ideal for AI Studio's sandboxed environment.

Code Walkthrough

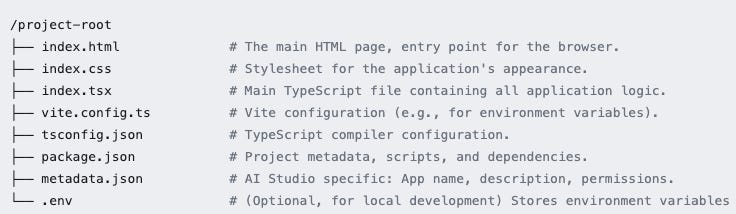

Let's explore the key TypeScript code that orchestrates this multimodal interaction. Before that, after creating an empty project, make sure the project contains (or create) the files needed for its full functionality and appearance.
