As a Multi-Agent Framework, CrewAI Has Its Advantages over AutoGen

A Quick Guide to App Development with CrewAI and How It Compares to AutoGen

It is becoming increasingly apparent that a multi-agent architecture produces better LLM-powered applications than a solo agent, even one driven by impeccable prompting strategies. Having explored AutoGen and shared development tips for it over several episodes, I am excited to guide you today through another fresh and interesting multi-agent framework: CrewAI. True to its name, CrewAI orchestrates an assembly of AI agents (your crew) that collaborate to accomplish intricate tasks within your application, one firm step at a time.

You might be wondering: why bother with yet another framework that brings together a bunch of AI agents, assigns them roles, and has them chat until they reach a final answer? Well, after spending some time tinkering with it, I can say that CrewAI stands out with some distinct advantages.

Overall advantages

One advantage comes from its design principle: it is more practical and production-oriented. It sacrifices a little flexibility and randomness in how speakers react and are orchestrated, but gains more certainty about each agent’s capability, task, and speaking turn. So far, the only orchestration strategy is “sequential”, with “consensual” and “hierarchical” planned for future releases.

When we dive into the framework and its code in the next section, we will find it super easy to ensure that tasks are handled by the relevant agents and processed in the defined sequence. What you will not see in CrewAI are lively interactions among agents, such as one agent correcting another or one agent speaking several times in a row. Such interactions are good for experiments or demonstrations but less useful for real-world LLM products, which need efficient, deterministic, and cost-effective task completion. CrewAI therefore prioritizes a streamlined and reliable approach. What it brings to the table is a robust group chat in which each AI agent knows precisely what to do and what its goals are.
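To make this trade-off concrete, here is a toy sketch in plain Python (no CrewAI or AutoGen calls) contrasting a fixed sequential schedule with a free-form one. The function names are hypothetical, and the seeded random picker merely stands in for the LLM-driven speaker selection a free-form group chat might use.

```python
import random
from typing import List, Optional, Sequence

AGENTS = ["weather_reporter", "activity_agent", "travel_advisor", "insure_agent"]


def sequential_turns(agents: Sequence[str]) -> List[str]:
    """Every agent speaks exactly once, in list order (a fixed, predictable schedule)."""
    return list(agents)


def random_turns(agents: Sequence[str], n_turns: int, seed: Optional[int] = None) -> List[str]:
    """Toy free-form chat: any agent may be picked at any turn, so repeats and skips can happen."""
    rng = random.Random(seed)
    return [rng.choice(agents) for _ in range(n_turns)]


if __name__ == "__main__":
    print("sequential:", sequential_turns(AGENTS))
    print("free-form: ", random_turns(AGENTS, n_turns=6, seed=1))
```

The sequential schedule contains each agent exactly once, so the number of turns (and, roughly, the cost) is known in advance; the free-form schedule can repeat or skip agents from run to run.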

The other, and in my mind the most important, advantage is its thriving ecosystem of tools, support, and resources for constructing agents and tasks. One would not normally expect that from such a new framework. Surprisingly, it delivers. That comes from a smart design decision: CrewAI is built on top of LangChain, a mature LLM framework that has already equipped LLM app developers with a wealth of tools and peripherals to enhance what language models can do.

CrewAI is accommodating for LLM app developers who are familiar with LangChain or have already built applications on top of it. For them, integrating existing solo agents into CrewAI is relatively easy. By comparison, AutoGen’s learning curve can be steeper, requiring more time to understand its usage and integrate agents effectively.

Code Walkthrough

Now let’s dive deeper and see how these advantages play out in code.

1. Agent Orchestration

To demonstrate how easy it is to orchestrate agents for a sequential task, I will give the agent group the same task as in my previous AutoGen demonstration: generate a travel plan with suitable activities given the weather conditions, plus an attached list of insurance items.

The group chat consists of four AI agents:

Weather reporter, who provides the weather conditions for the given locations and dates. We will enable tool usage in the next chapter to feed it real-time data.

Activity agent, who provides recommendations for travel activities based on the location and weather conditions.

Travel advisor, who generates a travel itinerary including every day’s activities.

Insurance agent, who recommends insurance options tailored to the planned activities and potential risks.

The conversation should therefore proceed in this order: Weather reporter -> Activity agent -> Travel advisor -> Insurance agent.
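Before writing the CrewAI code, the hand-off itself can be sketched in plain Python. This is a minimal sketch under the assumption that each step receives the previous step’s output as context, which is roughly how I understand CrewAI’s sequential process to pass results along; `make_stub_agent` and `run_sequential` are hypothetical helpers, and the stub agents return placeholder strings instead of calling an LLM.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for an LLM-backed agent: it takes the task description and the
# previous agent's output (the "context") and returns its own output.
AgentFn = Callable[[str, str], str]


def make_stub_agent(role: str) -> AgentFn:
    """Build a placeholder agent that simply echoes its task and the context it received."""
    def agent(task: str, context: str) -> str:
        return f"[{role}] {task} | context: {context or 'none'}"
    return agent


def run_sequential(steps: List[Tuple[AgentFn, str]]) -> List[str]:
    """Run each (agent, task) pair exactly once, in order, feeding each output to the next step."""
    outputs: List[str] = []
    context = ""
    for agent, task in steps:
        context = agent(task, context)
        outputs.append(context)
    return outputs


steps = [
    (make_stub_agent("weather_reporter"), "report the weather"),
    (make_stub_agent("activity_agent"), "recommend activities"),
    (make_stub_agent("travel_advisor"), "draft the itinerary"),
    (make_stub_agent("insure_agent"), "list insurance items"),
]

if __name__ == "__main__":
    for line in run_sequential(steps):
        print(line)
```

Each printed line embeds the one before it, which is the whole point of the ordering: the insurance agent can only reason about the itinerary because the advisor ran first.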

Let’s implement them in code.

Step 1: Install the CrewAI package first.

```shell
pip install crewai
```

Step 2: Import and Setup

Since the low-level implementation relies on the LangChain library, we also need to import the relevant LangChain packages alongside CrewAI.

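```python
import os

from crewai import Agent, Task, Crew, Process
from langchain_openai import ChatOpenAI

# ChatOpenAI reads your key from the OPENAI_API_KEY environment variable:
# export it in your shell, or set os.environ["OPENAI_API_KEY"] before this line.
llm = ChatOpenAI(model="gpt-4-1106-preview")
```

Here, llm is defined with the GPT-4 Turbo preview model, gpt-4-1106-preview.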

Step 3: Construct the agents

Constructing an agent mainly means setting up its system prompt. CrewAI splits the system prompt (and arguably the agent description) into several sections. Look at the code for the weather reporter:

```python
Weather_reporter = Agent(
    role='Weather_reporter',
    goal="""provide historical weather
    overall status based on the dates and location user provided.""",
    backstory="""You are a weather reporter who provides weather
    overall status based on the dates and location user provided.
    You are using historical data from your own experience. Make your response short.""",
    verbose=True,
    allow_delegation=False,  # this agent never hands its task to another agent
    llm=llm,
)
```

To construct an agent, you normally fill in role, goal, and backstory. Their names are fairly self-explanatory: role is the agent’s name, goal is the reason for creating the agent, and backstory describes what the agent is capable of. allow_delegation controls whether the agent may pass a task on to another agent when it cannot handle the task itself; setting it to False keeps each agent on its own task.
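As a rough illustration of how the three sections might be combined into one system prompt, here is a hypothetical `AgentSpec` dataclass. The template in `to_system_prompt` is my own assumption for illustration; CrewAI’s actual prompt template is internal to the library and may differ between versions.

```python
from dataclasses import dataclass


@dataclass
class AgentSpec:
    """Hypothetical container for the role / goal / backstory sections of an agent."""
    role: str
    goal: str
    backstory: str

    def to_system_prompt(self) -> str:
        # Illustrative template only: identity first, then capabilities, then the goal.
        return f"You are {self.role}.\n{self.backstory}\n\nYour personal goal is: {self.goal}"


weather_spec = AgentSpec(
    role="Weather_reporter",
    goal="provide historical weather overall status based on the dates and location user provided.",
    backstory=(
        "You are a weather reporter who provides weather overall status based on the dates "
        "and location user provided. You are using historical data from your own experience. "
        "Make your response short."
    ),
)

if __name__ == "__main__":
    print(weather_spec.to_system_prompt())
```

Keeping the sections separate means you can tweak, say, only the goal of an agent without touching its persona, which is part of what makes each agent’s behavior predictable.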

Following the same method, let’s construct the remaining three agents.

```python
# load_tools(["human"]) returns LangChain's human-input tool, which lets an agent ask a
# person for feedback in the console. (In newer LangChain releases the same function is
# imported from langchain_community.agent_toolkits.load_tools.)
from langchain.agents import load_tools

human_tools = load_tools(["human"])

activity_agent = Agent(
    role='activity_agent',
    goal="""responsible for activities
    recommendation considering the weather situation from weather_reporter.""",
    backstory="""You are an activity agent who recommends
    activities considering the weather situation from weather_reporter.
    Don't ask questions. Make your response short.""",
    verbose=True,
    allow_delegation=False,
    llm=llm,
)

travel_advisor = Agent(
    role='travel_advisor',
    goal="""responsible for making a travel plan by consolidating
    the activities and require human input for approval.""",
    backstory="""After activities recommendation generated
    by activity_agent, You generate a concise travel plan
    by consolidating the activities.""",
    verbose=True,
    allow_delegation=False,
    tools=human_tools,  # the only agent allowed to ask the human for approval
    llm=llm,
)

Insure_agent = Agent(
    role='Insure_agent',
    goal="""responsible for listing the travel plan from advisor and giving the short
    insurance items based on the travel plan""",
    backstory="""You are an Insure agent who gives
    the short insurance items based on the travel plan.
    Don't ask questions. Make your response short.""",
    verbose=True,
    allow_delegation=False,
    llm=llm,
)
```

Human interaction is a basic component of governing multi-agent applications: it ensures that AI agents speak under appropriate supervision. Unlike AutoGen, which requires developers to construct a user proxy agent to incorporate human interaction, CrewAI’s LangChain integration offers a streamlined approach. Loading the tool named “human” and passing it via tools=human_tools in the travel_advisor definition is all it takes to enable human input. Next, we write the instruction to consult the human into the description of the Task object, which we introduce in the next step.
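Conceptually, the human tool adds an approval loop around the advisor’s draft. The sketch below is a stdlib-only approximation with hypothetical function names (`human_review`, `finalize_plan`), not LangChain’s implementation. The `ask` parameter defaults to the built-in `input()` (LangChain’s human tool also reads from the console by default, as far as I know), but here it can be swapped for a scripted function so the loop runs unattended.

```python
from typing import Callable


def human_review(draft: str, ask: Callable[[str], str] = input) -> str:
    """Show the draft to a person; an empty reply means approved, anything else is feedback."""
    return ask(f"Draft travel plan:\n{draft}\nIs this draft good? (Enter to approve) ").strip()


def finalize_plan(
    draft: str,
    revise: Callable[[str, str], str],
    ask: Callable[[str], str] = input,
    max_rounds: int = 3,
) -> str:
    """Ask for approval, revise on feedback, and stop on approval or after max_rounds reviews."""
    for _ in range(max_rounds):
        feedback = human_review(draft, ask)
        if not feedback:
            return draft  # approved as-is
        draft = revise(draft, feedback)
    return draft  # stop after max_rounds and return the latest revision


# Scripted run: the "human" first asks for a change, then approves.
replies = iter(["add a rest day", ""])
final_plan = finalize_plan(
    "Day 1: snorkeling; Day 2: hiking",
    revise=lambda d, fb: f"{d}; revised per feedback: {fb}",
    ask=lambda prompt: next(replies),
)
print(final_plan)
```

The `max_rounds` cap is a design choice of this sketch: it keeps a picky reviewer from turning the deterministic pipeline into an open-ended loop.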

Step 4: Construct the tasks

In CrewAI, there is no “overall” task for the entire group; instead, you assign an individual task to each agent with the Task class.

```python
# Each agent gets its own Task; there is no single overall task for the crew.
# Note: recent CrewAI releases also require an expected_output string on every Task.
task_weather = Task(
    description="""Provide weather
    overall status in Bohol Island in Sept.""",
    agent=Weather_reporter,
)

task_activity = Task(
    description="""Make an activity list
    recommendation considering the weather situation""",
    agent=activity_agent,
)

task_insure = Task(
    description="""1. Copy and list the travel plan from task_advisor. 2. giving the short
    insurance items based on the travel plan considering its activities type and intensity.""",
    agent=Insure_agent,
)

task_advisor = Task(
    description="""Make a travel plan which includes all the recommended activities, and weather,
    Make sure to check with the human if the draft is good
    before returning your Final Answer.""",
    agent=travel_advisor,  # the agent that holds the human tool
)
```

Note that you must explicitly assign an agent to each task with agent=….

If you want the human to comment on the travel plan, try appending the text “Make sure to check with the human if the draft is good before returning your final answer” to the task description, as in task_advisor above.

Step 5: Construct the crew and kick it off

Now, it’s time to wrap them up into a capable crew with an orchestration strategy.

```python
crew = Crew(
    agents=[Weather_reporter, activity_agent, travel_advisor, Insure_agent],
    tasks=[task_weather, task_activity, task_advisor, task_insure],
    process=Process.sequential,  # the default strategy, stated explicitly
    verbose=2,  # print [Info] and [Debug] logs; recent releases take a boolean instead
)

result = crew.kickoff()
print(result)
```

With the sequential strategy, currently the only option, the order of the agents list and of the tasks list is exactly the order in which the agents execute their tasks. In my tests, both orders had to be aligned, though I think the design should be improved so that the tasks list is the only reference for execution order. Setting verbose to 2 makes the system print [Info] and [Debug] information.
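Because the two orders had to match in my tests, a small pre-flight check can catch a mismatch before kickoff() spends any tokens. `check_alignment` is a hypothetical helper that works on plain role names and assumes one task per agent, as in this example; with real CrewAI objects you could pass `[a.role for a in agents]` and `[t.agent.role for t in tasks]`, assuming each Task exposes its assigned agent as `.agent`.

```python
from typing import List, Sequence


def check_alignment(agent_order: Sequence[str], task_agent_order: Sequence[str]) -> List[str]:
    """Compare the crew's agent order with the agent assigned to each task, in task order.

    Assumes one task per agent. Returns a list of problems; an empty list means the
    two orders line up.
    """
    problems: List[str] = []
    if len(agent_order) != len(task_agent_order):
        problems.append(f"{len(agent_order)} agents but {len(task_agent_order)} tasks")
    for i, (agent, task_agent) in enumerate(zip(agent_order, task_agent_order)):
        if agent != task_agent:
            problems.append(f"position {i}: agents list has {agent!r}, task is assigned to {task_agent!r}")
    return problems


agents = ["Weather_reporter", "activity_agent", "travel_advisor", "Insure_agent"]
# Task order from the crew above: weather, activity, advisor, insure.
print(check_alignment(agents, ["Weather_reporter", "activity_agent", "travel_advisor", "Insure_agent"]))  # []
# Swapping the last two tasks is reported twice, once per misplaced position.
print(check_alignment(agents, ["Weather_reporter", "activity_agent", "Insure_agent", "travel_advisor"]))
```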

Once everything is ready, simply calling the kickoff() function starts the group generation.

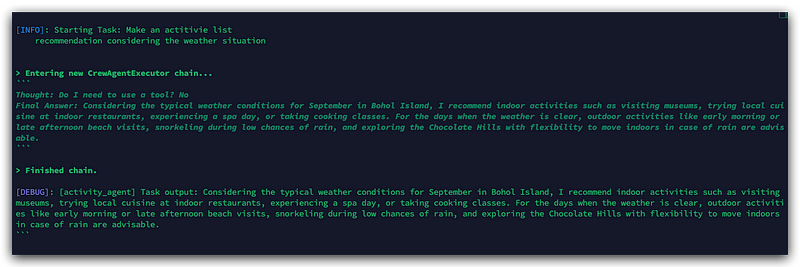

In the printed output, you will see the familiar trace of LangChain’s ReAct process for each task.

At the end, the final answer shows the expected travel plan:

2. Agent with Tools

Now comes the most fun part of CrewAI’s agent construction: the use of tools.

When we developed AI group chats with the AutoGen framework, OpenAI’s function calling feature made it very convenient to call external APIs or custom functions and extend the agents’ knowledge. Unfortunately, function calling is only available for GPT models and very few fine-tuned open-source models. Because CrewAI builds on LangChain, its agents naturally support LangChain’s tool interface for interacting with the real world, and that interface works with all compatible models. Although tools are less reliable than function calls, they are well suited to open-source models as long as the tool does not require complicated input parameters.
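To see why simple inputs matter, consider how a ReAct-style tool call is expressed: the model writes plain text such as “Action: …” followed by “Action Input: …”, and the framework has to parse it. The regex below is my own simplified version of that format, not LangChain’s actual parser, and `weather_lookup` and `book_trip` are hypothetical tool names.

```python
import json
import re
from typing import Optional, Tuple

# Simplified pattern for the "Action: <tool>" / "Action Input: <input>" lines of a
# ReAct-style completion (my own regex, not LangChain's parser).
ACTION_RE = re.compile(r"Action\s*:\s*(.+?)\s*\nAction\s*Input\s*:\s*(.*)", re.DOTALL)


def parse_action(text: str) -> Optional[Tuple[str, str]]:
    """Return (tool_name, raw_input) from a ReAct-style model output, or None if no action."""
    match = ACTION_RE.search(text)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip().strip('"')


def is_valid_json(raw: str) -> bool:
    """Multi-argument tools need structured input; check whether the raw text parses as JSON."""
    try:
        json.loads(raw)
        return True
    except json.JSONDecodeError:
        return False


# A single free-text input parses cleanly.
simple = "Thought: I need the weather.\nAction: weather_lookup\nAction Input: Bohol Island, September"
print(parse_action(simple))  # ('weather_lookup', 'Bohol Island, September')

# A structured input only works if the model writes well-formed JSON; here it forgot the "}".
broken = 'Thought: I should book.\nAction: book_trip\nAction Input: {"city": "Bohol", "days": 3'
name, raw = parse_action(broken)
print(name, is_valid_json(raw))  # book_trip False
```

A single free-text input survives this round trip easily, whereas a multi-argument tool depends on the model emitting well-formed JSON inside plain text, which is one reason such tools tend to be less reliable than native function calling.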


Subscribe to Lab For AI to keep reading this post and get 7 days of free access to the full post archives.